The Transformer architecture is a sequence-to-sequence model that reshaped sequence modeling by relying primarily on the attention mechanism, rather than recurrence, to model dependencies in data. This marked a significant shift from earlier approaches built on recurrent networks (RNNs, LSTMs, GRUs), which process tokens one step at a time. Because attention relates every position of a sequence to every other position directly, the Transformer can process a whole sequence in parallel during training. This led to faster training and stronger performance on a range of tasks, most notably in NLP.
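To make the contrast with recurrence concrete, here is a minimal sketch of scaled dot-product attention, softmax(QKᵀ / √d_k)·V, the attention form used in the original Transformer. It is written with plain Python lists for readability; the helper names are hypothetical, and it omits masking, multiple heads, and the learned projections that produce Q, K, and V. Each output row depends only on its own query and the full K and V, so all rows can be computed independently (in parallel), whereas step t of an RNN must wait for the hidden state from step t-1.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V for a single sequence, without masking."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # (n_q x n_k) query-key similarities
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    # Each query row is normalized on its own; no row depends on another,
    # so every position can be computed in parallel.
    weights = [softmax(row) for row in scaled]
    return matmul(weights, v)  # each output is a weighted average of value rows


# Toy example: each query is most similar to the key at the same position.
q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[10.0, 0.0], [0.0, 10.0]]
out = scaled_dot_product_attention(q, k, v)
```

In the toy example each query attends most strongly to its matching key, so each output row leans toward the corresponding value row while still mixing in the other one.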

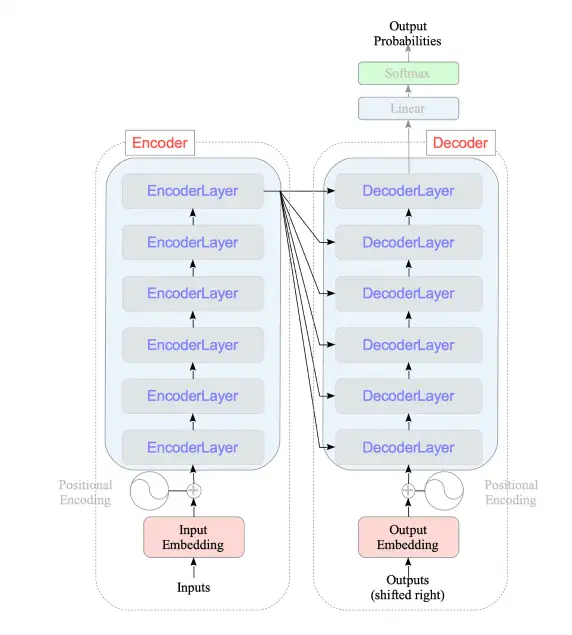

The core of the Transformer lies in its encoder-decoder architecture. The encoder maps the input sequence to a sequence of contextual vector representations, and the decoder uses these representations to generate the output sequence. Key conceptual parts include:

- Attention mechanism: the central component, which lets the model weigh the importance of different parts of the input sequence when processing it or generating output

- Transformer encoder block: one layer of the encoder; a stack of these blocks transforms the input sequence into a sequence of continuous representations

- Transformer decoder block: one layer of the decoder; a stack of these blocks generates the output sequence, conditioned on the encoder’s output

- Input representation: how discrete input tokens (for example, text) are converted into vectors, using token embeddings plus positional encodings that inject sequence order without recurrence

- Output generation: how the decoder’s final representations are converted into output tokens, using a linear output projection followed by a softmax over the vocabulary
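The input and output ends of the model can be sketched in a few lines. This sketch assumes the sinusoidal positional encoding from the original Transformer paper (learned position embeddings are a common alternative), and it uses a toy embedding table and a toy output projection; all function names and the tiny dimensions are hypothetical, chosen only for illustration. It also leaves out details such as scaling the embeddings and the bias term in the output projection.

```python
import math
from typing import List

Vector = List[float]


def sinusoidal_position(pos: int, d_model: int) -> Vector:
    """PE(pos, 2i) = sin(pos / 10000^(2i/d_model)), PE(pos, 2i+1) = cos(same angle)."""
    pe = []
    for j in range(d_model):
        i = j // 2  # dimensions 2i and 2i+1 share one frequency
        angle = pos / (10000 ** (2 * i / d_model))
        pe.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
    return pe


def embed(token_ids: List[int], table: List[Vector]) -> List[Vector]:
    """Look up each token's embedding and add (not concatenate) its positional encoding."""
    d_model = len(table[0])
    return [[e + p for e, p in zip(table[t], sinusoidal_position(pos, d_model))]
            for pos, t in enumerate(token_ids)]


def next_token_distribution(h: Vector, w_out: List[Vector]) -> Vector:
    """Project a d_model vector to vocabulary logits, then softmax into probabilities."""
    vocab_size = len(w_out[0])
    logits = [sum(h[i] * w_out[i][v] for i in range(len(h))) for v in range(vocab_size)]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy setup: vocabulary of 3 tokens, d_model = 4.
table = [[0.0] * 4, [1.0] * 4, [0.5] * 4]
x = embed([1, 2], table)  # tokens 1 and 2 at positions 0 and 1
w_out = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]]
# Here the last embedded vector stands in for the decoder's final hidden state.
probs = next_token_distribution(x[-1], w_out)
next_id = max(range(len(probs)), key=probs.__getitem__)  # greedy choice
```

Adding the positional encoding to the token embedding keeps the vector width at d_model; picking the highest-probability token is greedy decoding, one of several ways (sampling, beam search) to turn the softmax output into tokens.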

The Transformer’s versatility extends beyond NLP. By adapting the input processing and output layers, it has become a fundamental building block in other domains, including computer vision (e.g., the Vision Transformer, ViT) and audio processing. Its ability to model global dependencies through attention makes it a powerful tool for a wide range of AI applications.