
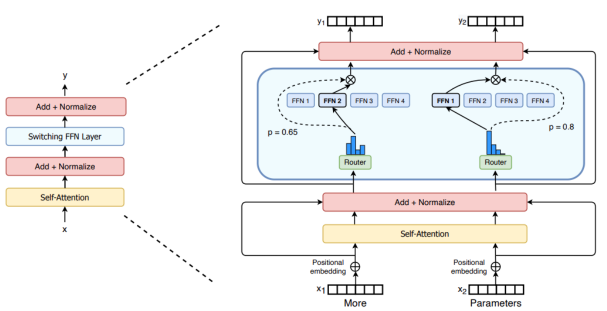

A landmark 2021 architecture from Google with 1.6T parameters. Like GShard, it replaces the FFN layers with MoE layers; the figure above shows a single Switch Transformer layer that takes two different tokens as input and routes them among four experts. For token routing, it uses a simple single-expert (top-1) strategy instead of GShard's top-2 routing.
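To make single-expert routing concrete, here is a minimal pure-Python sketch of one Switch layer, assuming a linear router followed by a softmax. Each token goes only to the expert with the highest router probability, and that expert's output is scaled by its gate value. The helper names (`softmax`, `router_logits`, `switch_layer`), the toy router weights, and the scaling "experts" are hypothetical stand-ins for real FFN experts. Expert capacity, token dropping, and the load-balancing loss are deliberately omitted.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> Vector:
    # Subtract the max logit for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def router_logits(x: Sequence[float], w_router: Sequence[Sequence[float]]) -> Vector:
    # x has length d_model; w_router has shape [d_model][num_experts]; returns x @ w_router.
    num_experts = len(w_router[0])
    return [sum(x[i] * w_router[i][j] for i in range(len(x))) for j in range(num_experts)]


def switch_layer(
    tokens: Sequence[Vector],
    w_router: Sequence[Sequence[float]],
    experts: Sequence[Expert],
) -> Tuple[List[Vector], List[int]]:
    """Top-1 (switch) routing: each token is processed by exactly one expert."""
    outputs: List[Vector] = []
    chosen: List[int] = []
    for x in tokens:
        probs = softmax(router_logits(x, w_router))
        k = max(range(len(probs)), key=probs.__getitem__)  # single best expert
        y = experts[k](x)
        # Scale the expert output by its gate probability; in a trained model
        # this multiplication is what lets the router receive gradients.
        outputs.append([probs[k] * v for v in y])
        chosen.append(k)
    return outputs, chosen


# Toy setup matching the figure: 2 tokens, 4 experts (all values hypothetical).
tokens = [[1.0, 0.0], [0.0, 1.0]]
w_router = [[2.0, 0.0, 0.0, 0.0],
            [0.0, 0.0, 3.0, 0.0]]
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]

outputs, chosen = switch_layer(tokens, w_router, experts)
print(chosen)  # [0, 2]
```

Compared with top-2 routing, each token runs through only one expert, which reduces both expert computation and the communication needed to dispatch tokens.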

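It also introduced several training-stability techniques, such as computing the router in float32 (selective precision) and using a smaller parameter initialization scale. The router z-loss, which stabilizes training without quality degradation by penalizing large logits entering the gating network, is often discussed alongside it, but it was introduced in the follow-up ST-MoE work (Zoph et al., 2022). Overall, Switch Transformer achieves a 4x pre-training speedup over T5-XXL.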
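The router z-loss can be sketched directly from the formulation in the ST-MoE paper. For a batch of $B$ tokens and $N$ experts with router logits $x^{(i)}_j$, it is $L_z = \frac{1}{B}\sum_{i=1}^{B}\left(\log\sum_{j=1}^{N} e^{x^{(i)}_j}\right)^2$. The function names and the default coefficient of `1e-3` below are assumptions made for illustration, not a specific library's API.

```python
import math
from typing import Sequence


def logsumexp(row: Sequence[float]) -> float:
    # Numerically stable log(sum(exp(z))) over one token's router logits.
    m = max(row)
    return m + math.log(sum(math.exp(z - m) for z in row))


def router_z_loss(router_logits: Sequence[Sequence[float]], coeff: float = 1e-3) -> float:
    """Mean over tokens of (logsumexp of router logits)^2, scaled by coeff.

    router_logits: one row of num_experts logits per token (B x N).
    coeff: loss weight; the 1e-3 default is an assumption for this sketch.
    """
    if not router_logits:
        raise ValueError("router_logits must contain at least one token")
    total = sum(logsumexp(row) ** 2 for row in router_logits)
    return coeff * total / len(router_logits)


# Both rows give identical (uniform) routing probabilities,
# but the row with larger logits is penalized more heavily.
small = router_z_loss([[0.0, 0.0, 0.0, 0.0]], coeff=1.0)
large = router_z_loss([[5.0, 5.0, 5.0, 5.0]], coeff=1.0)
print(small < large)  # True
```

Because the softmax is invariant to adding a constant to every logit, the routing decision alone cannot keep logits small. The z-loss adds that pressure explicitly, which helps avoid round-off problems when large logits pass through the exponential.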