Topics

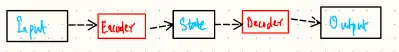

Encoder-decoder architectures handle variable-length input and output sequences, which makes them well suited to sequence-to-sequence (seq2seq) tasks such as machine translation. The encoder compresses the input into a fixed-shape representation (the memory), and the decoder generates the output from this memory.
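To make the "fixed-shape memory" idea concrete, here is a minimal toy sketch in plain Python. Nothing in it is learned: `DIM`, `embed`, `encode`, and `decode` are hypothetical names chosen for illustration, and the embedding and decoding rules are arbitrary stand-ins for trained networks. The only point is that inputs of different lengths yield a memory of the same shape, and that the output length is independent of the input length.

```python
from typing import List

DIM = 4  # hypothetical size of the fixed-shape memory


def embed(token: str) -> List[float]:
    # Toy deterministic embedding derived from character codes (not learned).
    total = sum(ord(c) for c in token)
    return [float(total % (i + 7)) for i in range(DIM)]


def encode(tokens: List[str]) -> List[float]:
    # Mean-pool the token embeddings, so the result always has DIM entries
    # no matter how many tokens come in. Assumes a non-empty input.
    vectors = [embed(t) for t in tokens]
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(DIM)]


def decode(memory: List[float], max_len: int) -> List[int]:
    # Toy autoregressive loop: each output depends on the memory
    # and on the previously generated output.
    outputs: List[int] = []
    prev = 0
    for _ in range(max_len):
        prev = (int(sum(memory)) + prev + 1) % 10
        outputs.append(prev)
    return outputs


short_memory = encode(["hello"])
long_memory = encode(["the", "cat", "sat", "down"])
generated = decode(long_memory, max_len=6)
```

Both `short_memory` and `long_memory` have `DIM` entries even though the inputs have one and four tokens, and `generated` has six entries regardless of the input length.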

In the Transformer, one specific implementation of this design, both components are stacks of identical layers. The encoder processes the full input with self-attention and produces a contextualized representation of each token. The decoder generates the output autoregressively, one token at a time: masked self-attention lets each position attend only to the current and earlier output positions, and cross-attention lets it consult the encoder memory.
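The difference between masked self-attention and cross-attention can be sketched with a single scaled dot-product attention function in plain Python. This is a simplified illustration, not the full Transformer layer: it assumes one head and omits the learned query/key/value projections, residual connections, and normalization. The name `attention` and the example vectors are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    # Numerically stable softmax; masked entries (-inf) get weight 0.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: Matrix, keys: Matrix, values: Matrix,
              causal: bool = False) -> Matrix:
    # Scaled dot-product attention. With causal=True, query position i
    # may only attend to key positions j <= i.
    d = len(keys[0])
    out: Matrix = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # hide future positions
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, values))
                    for c in range(len(values[0]))])
    return out


memory = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 encoder positions
targets = [[1.0, 0.0], [0.0, 1.0]]             # 2 decoder positions

# Masked self-attention: queries, keys, and values all come from the decoder.
self_out = attention(targets, targets, targets, causal=True)
# Cross-attention: queries come from the decoder, keys/values from the encoder.
cross_out = attention(self_out, memory, memory)
```

With the causal mask, the first decoder position can only see itself, so `self_out[0]` equals `targets[0]`. The cross-attention output has one row per decoder position even though the memory has three positions, which is how the decoder reads from an input of a different length.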

The architecture is not limited to sequence tasks: a variational autoencoder (VAE), for example, uses an encoder and a decoder for image generation. Because encoding and decoding are separate stages, the input and output can differ in length and structure.