Topics

Because an MoE comprises many experts, its total parameter count is larger than the number of parameters actually used for any given token. During training or fine-tuning, this extra capacity makes the model prone to overfitting. One way to tackle this is stronger regularization:

- Higher dropout within the experts

- Token dropping
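To make the parameter gap and the first option concrete, here is a minimal pure-Python sketch. It is illustrative only: the helper names (`moe_param_counts`, `dropout`), the toy layer sizes, and the two dropout rates are assumptions chosen for the example, not values taken from the text above. It assumes top-k routing, so each token only touches `top_k` experts, and it applies a higher inverted-dropout rate inside the experts than in the shared (dense) layers.

```python
import random


def moe_param_counts(num_experts, params_per_expert, top_k, shared_params):
    """Return (total, active-per-token) parameter counts for a toy MoE.

    Assumes top-k routing: every token uses the shared parameters plus
    the parameters of exactly `top_k` experts.
    """
    total = shared_params + num_experts * params_per_expert
    active = shared_params + top_k * params_per_expert
    return total, active


def dropout(values, rate, rng):
    """Inverted dropout: zero each value with probability `rate` and
    rescale the survivors by 1 / (1 - rate) so the expected value is unchanged."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


# Hypothetical rates: a mild rate for shared layers, a higher one in the experts.
DENSE_DROPOUT = 0.1
EXPERT_DROPOUT = 0.4

total, active = moe_param_counts(
    num_experts=8, params_per_expert=100, top_k=2, shared_params=50
)
print(total, active)  # 850 250

rng = random.Random(0)
hidden = [1.0] * 10
dense_out = dropout(hidden, DENSE_DROPOUT, rng)    # few activations zeroed
expert_out = dropout(hidden, EXPERT_DROPOUT, rng)  # more activations zeroed
```

In this toy setup the model stores 850 parameters but only 250 are exercised per token, which is the gap the paragraph above describes. The heavier dropout is applied only where that extra capacity lives. In a real framework the same idea would typically be expressed by giving expert feed-forward layers their own dropout rate.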

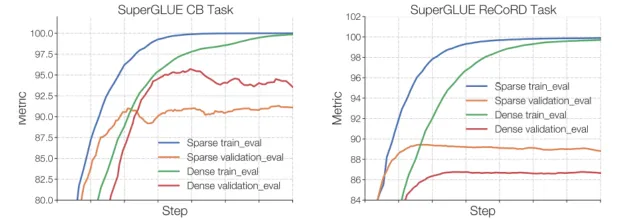

On small tasks such as SuperGLUE CB, the MoE overfits heavily, while on larger tasks such as SuperGLUE ReCoRD it performs well.