
In a typical RAG pipeline, the retriever has to be efficient over large document collections with millions of entries. However, it might return irrelevant candidates. A re-ranker based on a Cross-Encoder can substantially improve the final results shown to the user.

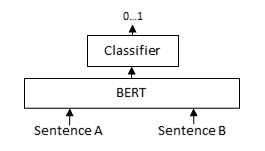

The query and a candidate document are passed together to a transformer network, which outputs a single score between 0 and 1 indicating how relevant the document is to the given query.
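The re-ranking step itself is small once a pair scorer is available. The sketch below is illustrative only: `toy_pair_score` is a hypothetical stand-in for a real Cross-Encoder (it uses word overlap squashed through a sigmoid, not a transformer), and `rerank` and `top_k` are names chosen for this example. It only shows the shape of the step: score every (query, document) pair, then sort by score.

```python
import math
from typing import Callable, List, Tuple


def toy_pair_score(query: str, document: str) -> float:
    """Hypothetical stand-in for a Cross-Encoder.

    A real Cross-Encoder feeds the query and document through a transformer
    together. Here we only count shared words and squash the count into (0, 1).
    """
    q_tokens = set(query.lower().split())
    d_tokens = set(document.lower().split())
    overlap = len(q_tokens & d_tokens)
    # Sigmoid maps the raw overlap count to a score strictly between 0 and 1.
    return 1.0 / (1.0 + math.exp(-(overlap - 1)))


def rerank(
    query: str,
    candidates: List[str],
    score_pair: Callable[[str, str], float],
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Score each retrieved candidate against the query and keep the best top_k."""
    scored = [(doc, score_pair(query, doc)) for doc in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Candidates as they might come back from a fast first-stage retriever.
candidates = [
    "Paris is the capital of France",
    "Bananas are rich in potassium",
    "France is famous for its cheese and wine",
]

for doc, score in rerank("what is the capital of France", candidates, toy_pair_score, top_k=2):
    print(f"{score:.3f}  {doc}")
```

In practice `score_pair` would wrap a trained Cross-Encoder model. Because the scorer runs once per candidate, it is usually applied only to the short list returned by the retriever rather than to the whole collection.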

We can also use an LLM as a cross-encoder. Closed-source models, such as OpenAI's, work too: pass the query and document in the prompt, ask the model to classify the pair as "yes" or "no", and use the token logprobs of the answer to derive a probability, which becomes the relevance score.
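One possible way to turn the answer token's logprobs into a relevance score is sketched below. It assumes the token-to-logprob mapping for the first answer token has already been extracted from the API response (the exact response fields vary by provider and are not shown here). The function name `relevance_from_logprobs` and the choice to renormalize over only the "yes" and "no" tokens are assumptions made for this example, not something the approach prescribes.

```python
import math
from typing import Dict


def relevance_from_logprobs(top_logprobs: Dict[str, float]) -> float:
    """Convert answer-token logprobs into P(yes), renormalized over yes/no.

    `top_logprobs` maps candidate first tokens (e.g. "yes", " Yes", "no")
    to their log-probabilities. Tokens that are neither yes nor no are ignored.
    """
    yes_mass = 0.0
    no_mass = 0.0
    for token, logprob in top_logprobs.items():
        label = token.strip().lower()
        if label == "yes":
            yes_mass += math.exp(logprob)
        elif label == "no":
            no_mass += math.exp(logprob)

    total = yes_mass + no_mass
    if total == 0.0:
        raise ValueError("neither 'yes' nor 'no' appears among the returned tokens")
    return yes_mass / total


# Made-up logprobs for illustration: 60% yes, 20% no, 20% something else.
example = {"yes": math.log(0.6), "no": math.log(0.2), "maybe": math.log(0.2)}
print(round(relevance_from_logprobs(example), 3))
```

Renormalizing over the two labels keeps the score in [0, 1] even when the model puts some probability on other tokens. Using the raw probability of "yes" without renormalizing is another reasonable choice.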